NCSA: So, tell us about Photran…

TJP2: My pleasure. Photran is a plug-in for the Eclipse IDE that provides support for Fortran development.

NCSA: …and Eclipse is?

TJP2: Yes. Eclipse is an open-source platform for building IDEs that was originally developed at IBM. It’s a descendant of their VisualAge for Java product. That product was originally written in Smalltalk. As the Java juggernaut picked up steam, IBM felt compelled to rewrite it in Java. They subsequently renamed this product Eclipse.

NCSA: And made it all open source?

TJP2: Yes, they “open-sourced” the entire codebase. This struck some as a bold gambit at the time, but Eclipse has turned into the finest IDE on “the market” for Java development. It’s pretty much brought about a mass extinction among competing IDEs. How do you compete with free, after all?

NCSA: So Eclipse is a free IBM product?

TJP2: Not anymore. It’s still free, of course, but IBM spun the Eclipse effort off to an autonomous non-profit entity, the Eclipse Foundation, so that it would not be seen as competing with other vendors. It’s ironic, though: IBM has been doing a better job of supporting Java than Sun itself in some respects. I wish they’d co-operate more. I wouldn’t mind seeing IBM acquire Sun, truth be told :-) At this point, they seem like a good match. But I’m a programmer, not an investment banker, so take this with a grain of salt.

NCSA: Agreed. Um, …a “plug-in”? What’s that mean? It sounds so convenient.

TJP2: It is. Actually, all Eclipse is is a collection of plug-ins. Plug-ins are medium-grained, relatively autonomous Java components. They are woven together with a layer of XML glue and a relatively modest collection of high-level integration conventions. This style of embedding more coarsely grained black-box components using mortar like XML (or Python, or Perl) is decidedly post-modern. Actually, Mosaic was one of the first high-profile exemplars of this style, if you ask me, though its “glue layer” was a thin coat of sloppy C code that bound together a hodge-podge of existing utilities. The rest is history, as you know.

NCSA: …Ahem. plug-ins?

TJP2: So anyway, Eclipse is nothing more than a constellation of hundreds and hundreds of plug-ins, flying in close formation (to mix metaphors). Indeed, IBM’s popular WebSphere product is nothing more than a large collection of plug-ins that run on top of Eclipse. IBM has made quite a lucrative business out of selling services for WebSphere. It’s as if GM were giving away cars, and making its money by selling gasoline and providing mechanics. But I digress.

In any case, this architecture means that anyone who wants to write their own plug-ins will see them integrated into Eclipse with all the fit, finish and flair of the existing plug-ins. After all, Eclipse itself is nothing but plug-ins, and reflective glue. And since it is open-source, you have lots of worked examples in front of you, and can use the source for existing plug-ins as the basis for writing your own.

NCSA: …and that’s how you built your Photran plug-in?

TJP2: Yes. Let me get back to your original question. Photran is a Fortran plug-in (or, to be precise, a collection of plug-ins) for Eclipse. Like the rest of Eclipse, it is itself written in Java. We built it by taking another set of plug-ins, the CDT (C/C++ Development Tools), and hacking away at it. Our ultimate goal is to provide facilities for Fortran programmers that meet the same standards as those that Eclipse is providing for Java programmers. Right now, we provide CVS repository management, Fortran code editing with syntax highlighting, powerful search-and-replace facilities, and code outlining. But what we really want to do is be able to refactor Fortran as easily as, or more easily than, you can now refactor Java using Eclipse.

NCSA: “Refactor”? What on Earth is refactoring? I’ve never heard of it.

TJP2: Our party line is that a refactoring is a “behavior preserving program transformation”. That sounds pretty clinical, doesn’t it? By “behavior preserving” we mean that it is a change that doesn’t change the way the program works.

NCSA: So what's the use in making a change to a program if it doesn’t change the way it works? That sounds like a waste of time.

TJP2: I demur. For example, you might discover that it would be easier to discern the intent of a program were somehow, as if by magic, all the occurrences of a variable, say “K”, were replaced with the name “SUM”.

NCSA: Couldn’t you do that with any editor?

TJP2: You could try, but depending upon how your program was written, you’d have to tread carefully, because any number of variables might be named “K”, and you’d only really want to change one specific incarnation of “K”.

NCSA: So a refactoring tool has to be smarter than an editor?

TJP2: Exactly. A refactoring tool knows about the structure, syntax, and semantics of its target language, and can guarantee that only the occurrences of the specific “K” you’d selected are refactored. This is the kind of thing that distinguishes simple code editing from refactoring: our substitution of “K” with “SUM” can be made as a first-class program transformation.
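The difference between a textual search-and-replace and a scope-aware rename can be illustrated with a small sketch. It is written in Python rather than Fortran, it uses Python's standard `ast` module (with `ast.unparse`, so Python 3.9 or later), and it is not how Photran itself works. The names `RenameLocal`, `rename_local`, `total`, `count`, and `running_sum` are hypothetical and chosen only for this illustration. Nested functions and other scoping subtleties are deliberately ignored.

```python
import ast

# Two routines that each happen to use a local variable named "k".
SOURCE = '''
def total(values):
    k = 0
    for v in values:
        k = k + v
    return k

def count(values):
    k = 0
    for _ in values:
        k = k + 1
    return k
'''


class RenameLocal(ast.NodeTransformer):
    """Rename one variable, but only inside one named function."""

    def __init__(self, function, old, new):
        self.function, self.old, self.new = function, old, new
        self.inside = False

    def visit_FunctionDef(self, node):
        if node.name == self.function:
            self.inside = True
            self.generic_visit(node)  # walk the body of the target function
            self.inside = False
        return node  # every other function is returned untouched

    def visit_Name(self, node):
        if self.inside and node.id == self.old:
            node.id = self.new
        return node


def rename_local(source, function, old, new):
    """Parse the source, rename within one function, and print it back out."""
    tree = RenameLocal(function, old, new).visit(ast.parse(source))
    return ast.unparse(tree)


# What a plain editor would do: every "k" changes, in both routines.
naive = SOURCE.replace("k", "running_sum")

# What a structure-aware tool would do: only the "k" in total() changes.
refactored = rename_local(SOURCE, "total", "k", "running_sum")
print(refactored)
```

In this sketch the naive replacement rewrites the variable in both routines, while the tree-based rename touches only the one inside `total` and leaves `count` alone. A real refactoring tool must also check that the new name does not collide with an existing one, which this sketch does not attempt.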

NCSA: So you can guarantee that you won’t screw your code up?

TJP2: Yup. That’s the beauty of refactoring. That’s where the “program transformation” part of “behavior preserving program transformation” comes in. We can bring some fancy computer science programming language theory to bear on the problem and show that our transformations do what they are supposed to do, only what they are supposed to do, and don’t break anything else in the process. This takes a tremendous burden off the programmer. It’s an amazing amenity.

NCSA: Well, renaming variables is nice, but…

TJP2: Yeah, another example, one of my favorites, is what the Java folks call the “Extract Method” refactoring. We’ll call it something like “Extract Procedure” in Fortran. This refactoring allows you to select a code passage in your source program, and in one fell swoop, convert that code into a free-standing method or subroutine, replacing it and any other occurrence of that code fragment with properly constructed invocations of the new routine. Your jaw drops the first time you see it in action. So, back to your earlier question: neither of these manipulations changes the way the code works one whit. But they make it easier to read, and thereby potentially easier to change. The computer itself doesn’t really care about these changes, but programmers who have to work with the code certainly do.
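A before-and-after pair may make the “Extract Method” idea more concrete. The sketch below is in Python rather than Fortran, and the extraction is done by hand; no tool is being demonstrated. The routine names `summarize_before`, `summarize_after`, and `mean` are hypothetical. The final check simply confirms that both versions compute the same results for one input, which is the “behavior preserving” part in miniature, not a proof.

```python
def summarize_before(xs, ys):
    """Original code: the same averaging passage appears twice."""
    total = 0.0
    for x in xs:
        total += x
    mean_x = total / len(xs)

    total = 0.0
    for y in ys:
        total += y
    mean_y = total / len(ys)

    return mean_x, mean_y


def mean(values):
    """The extracted routine: one copy of the formerly duplicated passage."""
    total = 0.0
    for v in values:
        total += v
    return total / len(values)


def summarize_after(xs, ys):
    """Refactored code: each duplicate is replaced by a call to mean()."""
    return mean(xs), mean(ys)


# Same inputs, same outputs: the refactoring did not change behavior here.
before = summarize_before([1, 2, 3], [10, 20])
after = summarize_after([1, 2, 3], [10, 20])
print(before == after)
```

The computer gets the same answers either way; what changes is that the averaging logic now lives in one named place.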

NCSA: So, are refactorings mainly cosmetic manipulations?

TJP2: You might think so in the case of giving variables new names. It must sound like high-tech copy editing to you. In the case of extracting routines, though, the changes are more than skin deep. You are improving the code’s structure. Moving duplicated, difficult to read code into one place at once makes the code easier to maintain, easier to understand, and easier to reuse. The structure of the entire application becomes more modular, as redundancy subsides, and autonomous modules, routines and data structures emerge. It feels a bit like rehabilitating a run-down house, or gentrifying a downtrodden neighborhood.

NCSA: All the software buzz-junkies are high on the likes of Python, Java, and even XML these days. Why Fortran? It sounds so retro; so old-school…

TJP2: We became interested in Fortran when it began to impinge upon our consciousness again from two directions at once. On one hand, we were given the opportunity by NASA to try to apply what we’d learned over the years about object-oriented software engineering, frameworks, and architecture to a large high-performance Fortran framework for simulating Gamma Ray Bursts in Type 1a Supernovae. This framework, IBEAM, was written primarily in Fortran. Actually, one of its principal authors is at NCSA now: Prof. Paul Ricker. Maybe you know him.

NCSA: Um, I might. And on this other hand?

TJP2: Yes, at around the same time, DARPA and IBM became interested in ways of improving the productivity of programmers in high-performance computing by an order-of-magnitude, under the aegis of their PERCS program. And, as your readers are surely aware, Fortran still maintains quite the presence in the high-performance arena.

NCSA: And you think refactoring can really improve the performance of Fortran programmers this dramatically?

TJP2: Of all the technologies in the program, I believe refactoring has the potential to produce a larger immediate benefit than any of the others. Indeed, I think full-featured refactoring tools can have a greater impact on Fortran programs than they have had for Java or Smalltalk, for a number of reasons. For one thing, Fortran is a more complex language than either. More bookkeeping needs to be done to keep declarations consistent than in some of the newer languages. And Fortran has gone through three or four major transformations over the years. There is a lot of Fortran code still mired in the Fortran 66 “GOTOs considered necessary” era. Refactoring tools are ideal for modernizing this kind of code. Fortran 77 code uses arrays for nearly every kind of abstraction; modern data structures weren’t introduced until Fortran 90. Finally, Fortran 2003 will be adding support for objects. Object-oriented transformations play a central role in Java refactoring tools. We’re looking forward to refactoring Fortran to objects one day in the not too distant future.

We recently did a presentation where we took a small piece of vintage Fortran code, a “dowdy dusty deck”, and performed what we called a “Total Code Makeover” on it. Incrementally, one step at a time, we brought this program into the seventies, then the nineties, and then into the realm of tomorrow’s object-oriented Fortran code.

NCSA: So you had to learn Fortran?

TJP2: Not at all. I’m an old Fortran hand. I wrote my first Fortran program on punch cards back during the Nixon administration, right across the street from here at DCL. That was before they added the second addition, the one that looks like a minimum security cellblock. I spent a number of years doing scientific programming in Fortran for a group that was trying to read minds with brainwaves over at the Psychology Dept. They never got that far, but they were well-funded. One of my greatest frustrations there was that none of the purportedly more modern programming languages that became available at the time were as well-suited to scientific programming as the Fortran 66 descendants we were using. Still, I envied those who were able to program in fancier, frillier languages like Pascal, C, and then C++. Nonetheless, no other language dealt with arrays as well, or as efficiently, as Fortran. That’s still pretty much the case. And, in the meantime, Fortran has evolved to match the feature sets of many of its successors.

NCSA: Well, there are some who’d pay a small fortune for a tool like the one you’re building, but yours is free, right?

TJP2: That’s right. Lock-stock-and-barrel / Hook-line-and-sinker. You can find our tool at http://www.photran.org. We’d be delighted to hear from any of your readers who’d be interested in testing and using our tool. And they’re welcome to contribute too.

NCSA: Well, this sounds like fascinating work. Thank you for your time.

TJP2: And thank you. That URL again is: http://www.photran.org...

So, what was Refactoring's Original Sin? Letting the idea be framed as a distinct phase, a polishing pass, an optional brush up, where working code was turned into "higher quality" working code that worked the same way as the original working code.

Framed this way, this could sound like an utterly optional spasm of flamboyant, narcissistic navel-gazing by self-indulgent prima donna programmers, rather than an inherent, indispensable, vital element of a process that cultivated the growth and sustained health of both the codebase and the team.

The primacy of code, and of runtime mechanisms as a condign recognition of the intricacy and fallibility of code was a central theme of my

And, in the glamorous and exotic world of object-oriented software style, of code couture, if you will, the very best designers, the doyens of design, are known, as in the fashion world, by a single name: Kent, Ward, Ralph, Rebecca, and ... Martin.

I use the term "heritage" advisedly. The more customary term is "legacy". That term is, of course, laden with negative connotations. And indeed, in the case of most of this code, most see little worth boasting about. The sheer burden of this accumulating legacy, however, is finally, belatedly, beginning to alter the way we think about software development, both in the academy and in industry.

Today, the green fields are gone; the frontier is closed. In Illinois, only a few thousand acres of virgin prairie remain. Today’s developers are confronted by construction sites that have seen extensive prior development. Instead of green fields, they must master broken field running, avoiding, or otherwise coming to terms with, existing obstacles. Some sites are so devastated as to be eligible for Superfund status...

Does software have a shape? Cope asked this years ago…

Construction sites are always muddy. Kitchens are always

People want assembly to be antiseptic, and free. It ain't. It takes time, and skill, to fit materials to the site, and the task, and craft them into an integrated whole. People don't want to live or work in prefab trailer park modules. They want real homes, real stores, real offices.

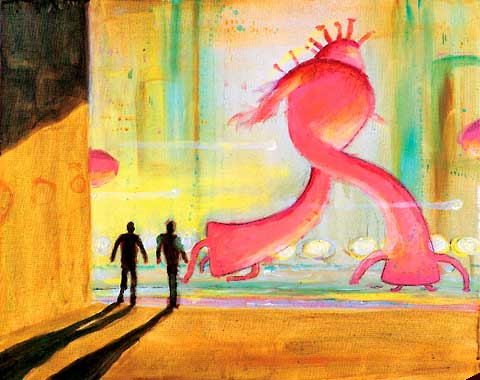

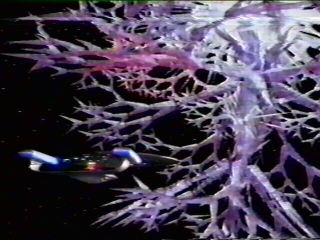

I recalled the climax of Arthur Clarke’s 2010: Something Wonderful is About to Happen. It feels as if Moore’s Law has been treading water of late, expending calories on a phase transition, as if melting ice, rather than generating predictable increases in raw heat. It feels as if, after a period of relative stagnation, things are about to change. It feels exciting. 2010 culminated with a new sun in the sky alongside the old. Jupiter transformed through fusion. The sky was yellow and the sun was blue. I wonder what color our new sun will really turn out to be. But the timeframe is starting to sound about right.

Like U.S. Grant, Microsoft is fighting its customary unrelenting war of attrition. Siege tactics. Lastest with the

Nonetheless, I felt like we were served left-overs, carrion, and I craved something more meaty, and more fresh. It seemed as if Rick had left his A-Game at home in Seattle. I wanted to run out to try to find Rick Rashid’s dog, to see if I could cajole him into coughing up the talk I wanted to hear instead...

Alan Kay has had what, by anybody's standards, would have to be called a good year. He recently bagged the Draper and Kyoto Prizes, and is poised to deliver his Turing Award lecture tomorrow night. His praises have been duly and extensively sung elsewhere; let it suffice to say that he has more than ample laurels upon which to rest, should he have so desired.

Software Maintenance: People perceive maintainers as software busboys of a sort, cleaning up after the designer and analyst have eaten. Academic work in this area seems to have been held back by the fact that people seem reluctant to become the world's most famous busboy...
